It seems like everyone is building an AI code review tool these days, each promising to revolutionize how we write and manage code. But after trying nearly every AI code reviewer on the market, and building one of our own, we’ve come to one undeniable conclusion: AI sucks at code reviews.

In this post, we’ll take a closer look at the real limitations of AI code reviewers: where they fall short, why human reviewers are irreplaceable, and what AI is actually good at.

The Problem with AI Code Reviews

False Positives & The Trust Issue

One of the biggest issues with AI code reviewers is their propensity for false positives. If you’ve ever used one, you’ve probably experienced the frustration of wading through a sea of minor suggestions and irrelevant warnings. AI models are designed to be helpful, sometimes too helpful. They can’t help but find issues with code, even when the code is well written. Helpfulness and verbosity are baked into the model; it’s what it’s trained to do!

In building our own AI code reviewer, we’ve spent more time reducing false positives than on any other part of the system. Tricks of the trade, like forcing the LLM to report issues through function calls or post-processing its output with supplemental prompts that verify relevance, can help. But these methods don’t guarantee success. By their very nature, LLMs are non-deterministic, probabilistic systems, and getting them to do exactly what you want is incredibly difficult.
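Here is a minimal sketch of the first trick plus a cheap post-filter, assuming the model is constrained to return JSON arguments for a hypothetical `report_issue` tool. The schema follows the general JSON Schema style many function-calling APIs use, but `REPORT_ISSUE_TOOL`, `parse_tool_calls`, `filter_issues`, and the `verify` callback (a stand-in for a second, verification prompt) are illustrative names, not any vendor's API.

```python
import json
from dataclasses import dataclass
from typing import Callable

# Hypothetical tool definition: the model must call report_issue once per
# finding instead of writing free-form review prose.
REPORT_ISSUE_TOOL = {
    "name": "report_issue",
    "description": "Report one concrete, actionable problem in the diff.",
    "parameters": {
        "type": "object",
        "properties": {
            "file": {"type": "string"},
            "line": {"type": "integer"},
            "severity": {"type": "string", "enum": ["low", "medium", "high"]},
            "message": {"type": "string"},
        },
        "required": ["file", "line", "severity", "message"],
    },
}


@dataclass
class Issue:
    file: str
    line: int
    severity: str
    message: str


def parse_tool_calls(raw_arguments: list[str]) -> list[Issue]:
    """Turn raw JSON tool-call arguments into Issues, dropping malformed calls."""
    required = REPORT_ISSUE_TOOL["parameters"]["required"]
    issues = []
    for raw in raw_arguments:
        try:
            args = json.loads(raw)
            if not isinstance(args, dict) or not all(k in args for k in required):
                continue  # missing fields: treat as no finding
            issues.append(Issue(str(args["file"]), int(args["line"]),
                                str(args["severity"]), str(args["message"])))
        except (ValueError, TypeError):  # ValueError includes json.JSONDecodeError
            continue
    return issues


def filter_issues(issues: list[Issue],
                  changed_lines: dict[str, set[int]],
                  verify: Callable[[Issue], bool]) -> list[Issue]:
    """Keep only non-trivial issues on changed lines that pass a second check."""
    kept = []
    for issue in issues:
        if issue.line not in changed_lines.get(issue.file, set()):
            continue  # comments on untouched code are usually noise
        if issue.severity == "low":
            continue  # suppress nitpicks to limit alert fatigue
        if verify(issue):  # e.g. a follow-up "is this really a problem?" prompt
            kept.append(issue)
    return kept
```

Dropping malformed calls, comments on untouched lines, and low-severity nits before the verification pass trims noise cheaply, but the verification prompt is still an LLM call, so it inherits the same non-determinism.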

False positives create ‘alert fatigue,’ where AI’s overzealous recommendations drown out actual issues. When reviewers have to sift through trivial feedback, they lose confidence in the tool and start to ignore it. AI reviewers, by design, often prioritize quantity over quality, making their suggestions feel more like noise than helpful guidance.

Limited Codebase Context

Most AI reviewers today don’t understand your codebase beyond the confines of the pull request being reviewed. Some vendors use Retrieval-Augmented Generation (RAG) to pull in code referenced by the pull request, but this approach is fraught with challenges and rarely done well. Indexing an entire codebase and retrieving relevant code from it (code that can differ between branches) gets tricky, and balancing the amount of additional context against limited LLM context windows and escalating costs is a constant struggle.
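The budgeting half of that struggle can be sketched as a packing problem. This minimal example assumes a retriever has already scored candidate snippets (the `Snippet` type and its scores are hypothetical) and greedily fills a fixed token budget; the four-characters-per-token estimate is a rough rule of thumb, not a real tokenizer.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    path: str
    text: str
    score: float  # relevance from a hypothetical retriever; higher is better


def estimate_tokens(text: str) -> int:
    # Rough heuristic of ~4 characters per token; real tokenizers vary.
    return max(1, len(text) // 4)


def pack_context(snippets: list[Snippet], budget_tokens: int) -> tuple[list[Snippet], int]:
    """Greedily add the most relevant snippets that still fit in the budget."""
    chosen: list[Snippet] = []
    used = 0
    for snippet in sorted(snippets, key=lambda s: s.score, reverse=True):
        cost = estimate_tokens(snippet.text)
        if used + cost <= budget_tokens:
            chosen.append(snippet)
            used += cost
    return chosen, used
```

Greedy packing is cheap, but it drops a highly relevant file outright once it no longer fits, and raising the budget to keep it adds cost to every review.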

As a result, very few AI reviewers make effective use of context beyond the current pull request. This limits them to surface-level feedback, such as minor style issues or simple optimizations, while they miss the more crucial, high-level aspects of code quality. High-level issues, such as architectural consistency, performance considerations for specific features, or broader system dependencies, are where code reviews have the most impact, and they are exactly where AI reviewers fall short for lack of context.

They Don’t Understand Intent

Intent is the driving force behind every code change, and it’s something most AI reviewers are not equipped to grasp. Unlike human reviewers, who can assess changes in light of business priorities, project timelines, or broader goals, AI reviewers focus narrowly on lines of code. They can’t see the bigger picture or understand the rationale behind certain decisions.

This gap matters because intent often guides developers to make nuanced trade-offs. For example, a developer may choose a straightforward solution that prioritizes readability over performance if the code needs to be maintained by a wide range of team members. Alternatively, a quick fix may be implemented to meet a critical deadline, with the understanding that it will be optimized later. AI, unable to weigh these factors, treats all issues with equal urgency, missing the broader context that human reviewers naturally take into account.

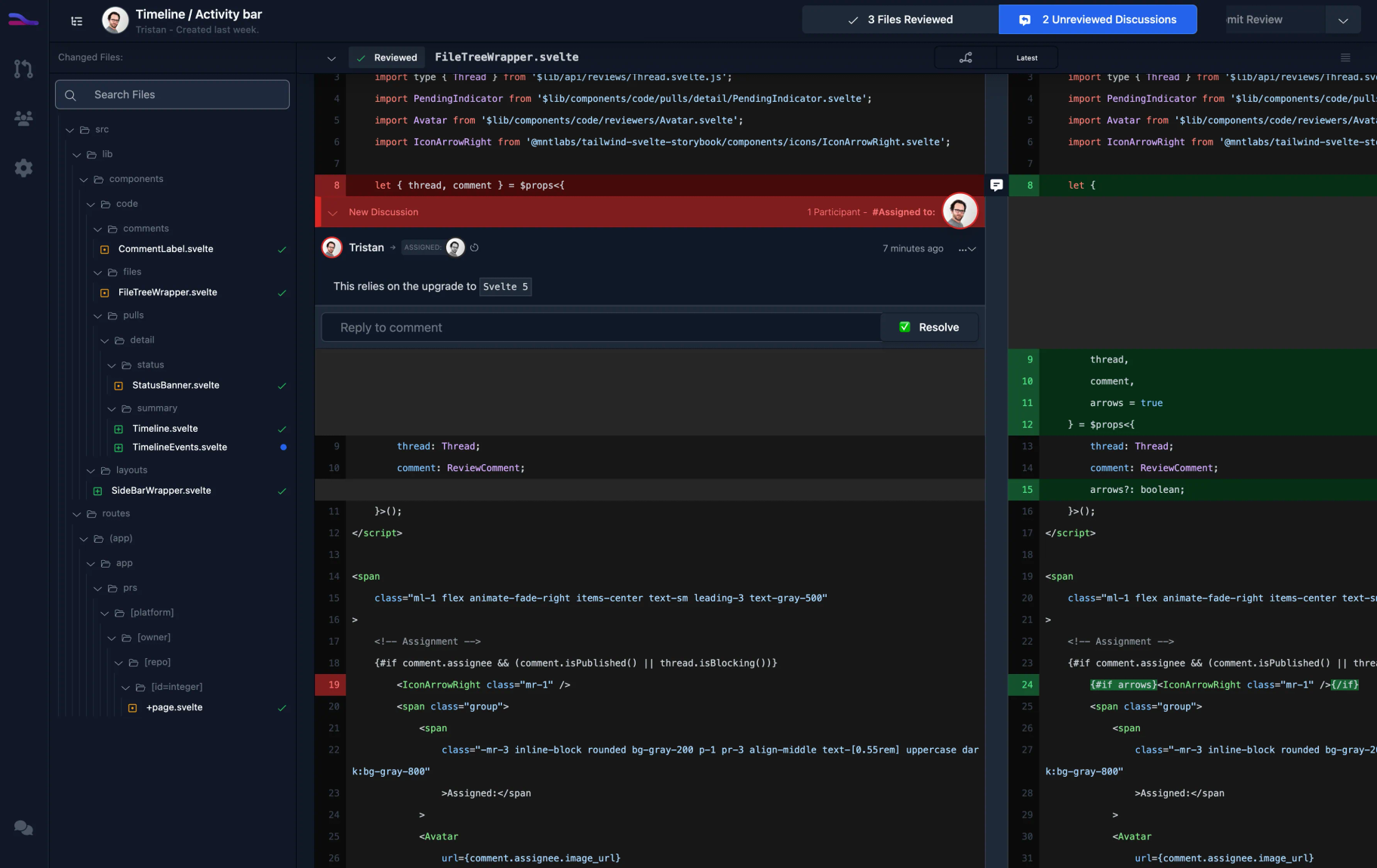

One-Way Reviews

Most AI reviewers operate as “single-shot” reviewers: they scan the code once and attempt to spot issues. As a code author, you can’t engage them in conversation to discuss an identified issue and explain your rationale. AI reviewers are built to analyze code, not to exchange ideas or foster consensus.

If you’ve ever had a constructive debate with a colleague in a code review, you know it’s often these very conversations where codebases truly improve, not the nit comments that highlight your odd preference for tabs over spaces.

Moreover, AI tools generally lack the historical context of code and previous discussions. They operate without knowing the conclusions reached in earlier reviews or ongoing team discussions. This disconnect prevents AI from recognizing when a particular segment of code has already been discussed or deemed acceptable in a previous review, either online or in team meetings.

Blind to Usage

AI reviewers lack awareness of how a particular piece of code is used within the overall application. For instance, an AI might flag a method as potentially slow without understanding that it's in a low-impact area, like an administrative feature used infrequently by a small audience. Conversely, an AI might not recognize that a seemingly innocuous query is part of a performance-critical feature, where every millisecond counts. Human reviewers, on the other hand, are well-equipped to assess the impact based on how critical the code is to the system's performance and user experience.

No Awareness of Team Dynamics

Effective code review involves more than understanding the code itself; it also requires an awareness of team dynamics. If a junior developer submits a PR, a human reviewer might scrutinize the changes more carefully, knowing they are still learning. AI cannot distinguish between a new hire and a seasoned engineer, nor can it understand team-specific nuances, like which parts of the system are particularly sensitive or have a history of issues. These nuances are critically important to the review process.

What’s Lost with AI-Only Code Reviews

AI code reviews are undeniably efficient, but they come with significant trade-offs. When we rely solely on AI to review code, we lose out on important aspects of the review process that are inherently human—things like team collaboration, knowledge sharing, mentorship, and consensus building. These elements are crucial not only for catching bugs but also for fostering growth, cohesion, and a deeper understanding among developers.

Team Collaboration and Knowledge Sharing

Human code reviews foster collaboration. They serve as a platform for developers to discuss design choices, architecture, and best practices. These discussions build team cohesion and often lead to incredible insights and alignment on future development. During a code review, a human reviewer might suggest an alternative design pattern, not just to improve the code but also to share knowledge with the author.

Mentorship and Skill Development

Code reviews aren’t just about shipping better code; they’re a learning opportunity. Junior developers get valuable feedback that helps them grow faster. An experienced reviewer might explain why a particular approach is more efficient or scalable, or point them to other areas of the codebase to explore. AI can tell you a loop is inefficient, but it can’t provide the broader context of why a different approach might be better for this specific case.

Consensus Building and Accountability

Code reviews are also about reaching consensus: finding the best solution through discussion. AI can make suggestions, but it can’t weigh different perspectives and reach a balanced decision. Worse, AI approval might give human reviewers a false sense of security (“If the AI didn’t flag it, it must be fine.”), which reduces accountability rather than enhancing it.

Where AI Shines in Code Reviews

Speed and Efficiency

AI code reviewers are fast. Insanely fast. They can provide feedback within seconds, something no human can match, which makes them a great first pass before deeper human review.

Syntax and Style Consistency

AI is adept at catching syntax errors, enforcing code style guidelines, and ensuring conformity to company practices. Linters have done this for years, but AI can take it one step further, pointing out deprecated methods or unsafe practices based on recent language and library updates.

Code Standard Enforcement

AI can easily identify deviations from coding standards or detect specific patterns that violate company or industry policies. This is particularly valuable for ensuring compliance and maintaining code quality.
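The deterministic core of standards enforcement is a rule table applied to the lines a change adds. The sketch below assumes two illustrative policies (no bare `eval` calls, no hard-coded secrets); `POLICIES`, `check_added_lines`, and the regexes are hypothetical examples rather than any real company's standard, and an AI reviewer would layer fuzzier judgment on top of checks like these.

```python
import re

# Hypothetical policy rules: (rule id, compiled pattern, explanation).
POLICIES = [
    ("no-eval",
     re.compile(r"(?<![\w.])eval\("),  # bare eval(), but not obj.eval() or literal_eval()
     "eval() on dynamic input is not allowed"),
    ("no-hardcoded-secret",
     re.compile(r"""(?i)\b\w*(password|secret|api_key|token)\s*=\s*['"][^'"]+['"]"""),
     "credentials must be loaded from configuration, not committed to source"),
]


def check_added_lines(diff: str) -> list[tuple[int, str, str]]:
    """Return (line number within the diff, rule id, explanation) for matching added lines."""
    findings = []
    for lineno, line in enumerate(diff.splitlines(), start=1):
        # In a unified diff, added lines start with "+", but "+++" is a file header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for rule_id, pattern, why in POLICIES:
            if pattern.search(line[1:]):
                findings.append((lineno, rule_id, why))
    return findings
```

Rules like these are cheap and predictable but brittle; the case for adding AI on top is catching variants nobody wrote a pattern for, at the cost of the false positives discussed earlier.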

Ensuring Best Practices

AI can cross-check against common patterns and best practices within specific frameworks or programming languages, helping to identify outdated or deprecated methods that could be updated to more efficient or secure alternatives.
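As one concrete Python example of this category: `datetime.datetime.utcnow()` is deprecated as of Python 3.12 in favor of timezone-aware calls. The sketch below pairs the outdated call with its replacement; the function names are made up for illustration, and this isn't a claim about what any particular tool flags.

```python
from datetime import datetime, timezone


def created_at_outdated() -> datetime:
    # Deprecated since Python 3.12: returns a *naive* datetime with no tzinfo,
    # which is easy to confuse with local time.
    return datetime.utcnow()


def created_at() -> datetime:
    # Preferred replacement: an aware datetime pinned to UTC.
    return datetime.now(timezone.utc)
```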

Low-Level Bugs and Security Vulnerabilities

AI is highly efficient at spotting low-level issues, such as fat-finger typos, common errors, and potential vulnerabilities like hardcoded credentials or SQL injection points.
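For the SQL injection case, this is the kind of pattern a reviewer, human or AI, should flag. The sketch uses Python's built-in `sqlite3` module with a throwaway in-memory table; the `users` schema and function names are made up for illustration.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list[tuple]:
    # Flag this: user input is spliced directly into the SQL text.
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list[tuple]:
    # Fix: a bound parameter is sent as data and never parsed as SQL.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (username,)).fetchall()


def make_demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


conn = make_demo_db()
payload = "' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2: the injected OR matches every row
print(len(find_user_safe(conn, payload)))    # 0: treated as a literal (nonexistent) name
```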

Conclusion: People Still Matter

Ultimately, the key reason human reviewers currently have the edge over AI is context. Human reviewers understand the project’s goals, the author's experience level, and the intent behind changes. Beyond that, human reviewers foster team spirit, mentorship, and accountability, qualities that no machine can replicate. They bring the nuance needed to balance competing priorities, consider the trade-offs, and ensure that the review process serves both the codebase and the team behind it. AI isn’t here to replace human reviewers just yet; it’s here to complement and empower them.

We’ve come to believe the ideal code review process harnesses the strengths of both AI and human reviewers. AI can be an efficient assistant, handling repetitive tasks, enforcing coding standards, and spotting low-level issues quickly. It can be the always-on sidekick that human reviewers engage with to better understand code and ensure they’re following best practices.

Human reviewers, meanwhile, bring strategic thinking, deep understanding, and the ability to navigate the gray areas of intent and context. We should view AI as a powerful assistant that can handle the tedious parts of code reviews, freeing us up to focus on what really matters: thoughtful design, mentorship, and building great software together.